A Simple Introduction to the AWS ENA Driver: How Enhanced Networking Improves Performance

The AWS ENA (Elastic Network Adapter) driver is a high-performance network interface driver developed and used by AWS to support high-bandwidth, low-latency networking in EC2 instances. ENA is designed to provide network speeds up to 100 Gbps on EC2 instances that require fast and reliable network connectivity, like high-performance computing (HPC) workloads, big data analytics, and machine learning.

Purpose of the ENA Driver

The AWS ENA driver is responsible for enabling and controlling Elastic Network Interfaces (ENIs) on EC2 instances. These ENIs are virtual network interfaces that allow instances to communicate within AWS and with the internet, supporting features such as:

1. Enhanced Network Performance

- The ENA driver supports high throughput, allowing instances to handle network speeds of up to 100 Gbps on supported instance types.

- It enables low latency networking, which is crucial for applications that require fast, real-time data transmission, such as financial trading platforms, gaming, or big data processing.

2. Scalability

- ENA supports scaling to handle millions of packets per second (PPS), making it suitable for high-performance workloads and applications that require processing of a large amount of network traffic.

3. Multi-Queue Support

- ENA uses multiple transmit (TX) and receive (RX) queues to distribute network processing across multiple vCPUs, which helps to maximize throughput and improve overall efficiency on multi-core instances.

4. Bypassing the hypervisor

- A mechanism called Single Root I/O Virtualization (SR-IOV) allows a VM (Virtual Machine) to access network hardware directly, bypassing the hypervisor. This results in reduced overhead, improved packet handling, and lower network latency.

5. Enhanced Network Security and Extensibility

- The ENA driver supports network features that enable security enhancements such as VPC traffic mirroring for deep packet inspection, monitoring, and debugging of network traffic.

- New custom functionalities can be implemented by using ENA driver support for XDP (eXpress Data Path), allowing the integration of eBPF programs.

How the ENA Driver Works

The ENA driver works by enabling direct communication between the EC2 instance and the ENA hardware on the underlying AWS infrastructure. It handles all the operations necessary for sending and receiving packets, queue management, interrupt handling, and more.

1. Instance Initialization

- When an EC2 instance is launched with ENA-enabled network interfaces, the ENA driver is loaded as part of the operating system.

- The driver communicates with the underlying ENA hardware on the AWS hypervisor via the PCIe bus, making the Elastic Network Interface (ENI) available to the guest OS.

2. Network Queues, Interrupt Handling, and Descriptor Rings

- The ENA driver uses multiple transmit (TX) and receive (RX) queues. These queues allow parallel processing of packets, distributing the load across multiple CPU cores, thereby improving throughput.

- This multi-queue architecture allows both packet transmission and reception to scale with the number of vCPUs on the instance, leading to better performance on larger instances.

To reduce the overhead caused by interrupts, ENA supports interrupt moderation:

- Adaptive Interrupts: Rather than generating an interrupt for every received or transmitted packet, interrupts are grouped together to reduce the number of CPU interrupts.

- This reduces CPU load and increases throughput, especially under heavy network traffic conditions.
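The effect of grouping interrupts can be illustrated with a small simulation. This is a toy model and not the ENA driver's actual algorithm: the function name `count_interrupts` and the `max_frames` and `max_wait_us` thresholds are invented for illustration. An interrupt is assumed to fire either when enough packets are pending or when the oldest pending packet has waited long enough.

```python
def count_interrupts(arrival_times_us, max_frames=1, max_wait_us=0):
    """Count interrupts for packets arriving at the given times (microseconds).

    Hypothetical model: an interrupt fires when `max_frames` packets are
    pending, or when the oldest pending packet has waited `max_wait_us`.
    With the defaults, every packet raises its own interrupt.
    """
    interrupts = 0
    pending = 0
    first_pending_at = None
    for t in arrival_times_us:
        # Timer-based interrupt: the oldest pending packet waited too long.
        if pending and t - first_pending_at >= max_wait_us:
            interrupts += 1
            pending = 0
        if pending == 0:
            first_pending_at = t
        pending += 1
        # Count-based interrupt: enough packets are queued up.
        if pending >= max_frames:
            interrupts += 1
            pending = 0
    # Flush whatever is still pending at the end of the trace.
    if pending:
        interrupts += 1
    return interrupts


arrivals = list(range(0, 1000, 10))  # 100 packets, one every 10 us
unmoderated = count_interrupts(arrivals)
moderated = count_interrupts(arrivals, max_frames=32, max_wait_us=64)
print(f"without moderation: {unmoderated} interrupts")
print(f"with moderation:    {moderated} interrupts")
```

The trade-off is latency: a packet may wait up to the timer threshold before the CPU is told about it, which is why adaptive schemes adjust the thresholds to the current traffic rate.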

Each TX and RX queue maintains descriptor rings, which are circular buffers used to store metadata about packets being sent or received.

The ENA driver works with the NIC to populate and process these rings:

- The driver adds entries to the TX descriptor ring for outgoing packets.

- The NIC fetches data, transmits it, and then notifies the driver (via interrupt or polling) that the packet has been sent.

- For incoming packets, the NIC writes packet data into the buffers referenced by the RX ring descriptors, which the ENA driver then processes and delivers to the guest operating system’s networking stack.
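The ring mechanics described above can be sketched as a fixed-size circular buffer with a producer index and a consumer index. This is a simplified illustration, not the ENA descriptor layout: the class name `DescriptorRing`, its methods, and the `buf_addr`/`length` fields are all hypothetical.

```python
class DescriptorRing:
    """Fixed-size circular buffer with producer and consumer indices."""

    def __init__(self, size):
        self.size = size
        self.slots = [None] * size
        self.head = 0   # next slot the producer will fill
        self.tail = 0   # next slot the consumer will read
        self.count = 0  # descriptors currently in flight

    def post(self, descriptor):
        """Producer side: add a descriptor; return False if the ring is full."""
        if self.count == self.size:
            return False
        self.slots[self.head] = descriptor
        self.head = (self.head + 1) % self.size  # wrap around at the end
        self.count += 1
        return True

    def complete(self):
        """Consumer side: take the oldest descriptor, or None if empty."""
        if self.count == 0:
            return None
        descriptor = self.slots[self.tail]
        self.slots[self.tail] = None
        self.tail = (self.tail + 1) % self.size
        self.count -= 1
        return descriptor


ring = DescriptorRing(size=4)
for i in range(5):
    # A descriptor carries metadata only: where the buffer is and how long.
    ok = ring.post({"buf_addr": 0x1000 * i, "length": 1500})
    print(f"post {i}: {'ok' if ok else 'ring full'}")
print("completed:", ring.complete())
```

On the TX path the driver is the producer and the NIC the consumer; on the RX path the roles are reversed. A full ring is what forces the producer to back off, which is one reason ring size matters for burst handling.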

3. Offloading mechanisms

ENA implements a number of offloading mechanisms to make network packet handling more efficient:

Checksum Offloading

- The ENA driver supports checksum offloading, where the NIC hardware calculates and verifies checksums for TCP, UDP, and IP packets instead of the CPU. This reduces the CPU overhead for handling large volumes of network traffic.
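To give a sense of the work being offloaded, here is a minimal sketch of the 16-bit ones' complement checksum from RFC 1071, which IPv4, TCP, and UDP all use. The function name `internet_checksum` is chosen for this example; without offloading, the CPU would run an equivalent loop over every packet.

```python
def internet_checksum(data: bytes) -> int:
    """Return the 16-bit ones' complement checksum defined in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add each 16-bit word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF


# A 20-byte IPv4 header with the checksum field (bytes 10-11) set to zero.
header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
checksum = internet_checksum(header)
print(f"checksum: {checksum:#06x}")

# Verification: with the checksum filled in, the same sum comes out as zero.
filled = header[:10] + checksum.to_bytes(2, "big") + header[12:]
print("valid:", internet_checksum(filled) == 0)
```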

Large Receive Offload (LRO) and Generic Receive Offload (GRO)

- LRO aggregates multiple incoming TCP segments into a single large segment before passing it to the operating system. This reduces the number of packets processed by the kernel and lowers CPU usage.

- GRO is a more flexible variant, which performs the same aggregation function but works at the software level. Both LRO and GRO help reduce the CPU load when dealing with large amounts of incoming network traffic.
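The aggregation idea can be sketched in a few lines. This is a deliberately simplified model, assuming segments are plain `(flow, seq, payload)` tuples and merging only adjacent segments. The kernel's GRO tracks several flows at once and also compares header fields and flags, none of which is modeled here. The name `coalesce` is made up for this example.

```python
def coalesce(segments, max_size=65535):
    """Merge in-order TCP segments of the same flow into larger ones.

    Each segment is a (flow, seq, payload) tuple. A segment is appended to
    the previous one only if it belongs to the same flow, starts exactly
    where the previous payload ended, and the result does not exceed
    max_size bytes.
    """
    merged = []
    for flow, seq, payload in segments:
        if merged:
            last_flow, last_seq, last_payload = merged[-1]
            contiguous = seq == last_seq + len(last_payload)
            fits = len(last_payload) + len(payload) <= max_size
            if flow == last_flow and contiguous and fits:
                merged[-1] = (last_flow, last_seq, last_payload + payload)
                continue
        merged.append((flow, seq, payload))
    return merged


incoming = [
    ("A", 0, b"a" * 1460),
    ("A", 1460, b"b" * 1460),
    ("A", 2920, b"c" * 1460),
    ("B", 0, b"d" * 100),
]
for flow, seq, payload in coalesce(incoming):
    print(flow, seq, len(payload))
```

Four segments go in and two come out, so the network stack above runs its per-packet processing twice instead of four times.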

TCP Segmentation Offload (TSO)

- TSO lets the CPU hand the NIC one large chunk of data instead of splitting it into smaller TCP segments itself. The ENA NIC performs the segmentation so that each segment fits in a frame (1500 bytes is the standard Ethernet MTU), which relieves the CPU of a resource-intensive task and improves throughput.
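The segmentation step itself is simple, which is part of why it suits hardware. The sketch below assumes a maximum segment size of 1460 bytes, which is what remains of a 1500-byte MTU after a 20-byte IPv4 header and a 20-byte TCP header with no options. The function name `segment` is invented for this example, and header generation is left out.

```python
def segment(payload: bytes, mss: int = 1460):
    """Split a payload into (sequence_offset, chunk) pairs of at most mss bytes."""
    return [(off, payload[off:off + mss]) for off in range(0, len(payload), mss)]


segments = segment(b"x" * 64000)
print("segments:", len(segments))
print("last segment length:", len(segments[-1][1]))
```

With TSO the stack builds one 64000-byte send and one set of headers, and the NIC produces the individual wire-sized segments, replicating and adjusting the headers for each.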

Scatter-Gather I/O (SGIO)

- SGIO, sometimes known as vectored I/O, allows the system to transmit or receive multiple buffers in a single I/O operation, reducing the number of memory copies required and improving network performance for large packets.
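The same idea is visible at the socket API level, which makes for a convenient illustration. The sketch below is an analogy and does not show what the NIC does with DMA: it uses Python's standard `socket.sendmsg` and `socket.recvmsg_into` over a local socket pair (Unix-like systems only) to send three separate buffers in one call and receive into two.

```python
import socket

# A connected pair of local sockets stands in for a real network path.
tx, rx = socket.socketpair()

header = b"HDR:"
body = bytearray(b"payload-bytes")
trailer = memoryview(b":END")

# sendmsg() takes a list of separate buffers and gathers them in one call,
# so the caller never copies them into one contiguous bytes object.
sent = tx.sendmsg([header, body, trailer])

# recvmsg_into() scatters the incoming bytes across several buffers.
part1, part2 = bytearray(4), bytearray(64)
nbytes, _ancdata, _flags, _addr = rx.recvmsg_into([part1, part2])

received = bytes(part1) + bytes(part2[:nbytes - len(part1)])
print(sent, nbytes, received)

tx.close()
rx.close()
```

In the NIC case the "list of buffers" is a chain of descriptors, each pointing at a separate region of memory, and the hardware gathers them while transmitting.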

3. Packet Processing

For Transmitting (TX) Packets:

- The ENA driver receives a network packet from the upper layer of the network stack in the guest operating system (e.g., from a TCP/IP application).

- It places the packet in a transmit (TX) descriptor ring, which is mapped to the VF (virtual function) representing the ENA NIC.

- The ENA NIC, through SR-IOV, accesses the data in the TX descriptor ring and sends the packet over the physical network.

- Once the packet is transmitted, the NIC writes back to the descriptor ring to indicate that the packet has been successfully sent, and the ENA driver processes the completion.

For Receiving (RX) Packets:

- When a packet is received on the physical NIC, it is placed into the RX descriptor ring, which is mapped to the EC2 instance’s ENA virtual function (VF).

- The ENA driver processes the packet from the RX ring and passes it up to the operating system’s network stack.

- If interrupt moderation is enabled, multiple packets can be processed in batches to reduce interrupt load.

4. Scaling and Resource Allocation

- The ENA driver is optimized for AWS’s large instance types, which require massive networking capacity (up to 100 Gbps).

- As the network demand increases, the ENA driver dynamically manages network queues and allocates resources such as CPU and memory to maintain optimal throughput and low latency.

- The ENA driver supports Receive Side Scaling (RSS), which allows network traffic to be split across multiple CPU cores by hashing (usually 4-tuple for TCP and 2-tuple for UDP) incoming packets and distributing them among multiple RX queues. This prevents bottlenecks on a single CPU core for heavy network loads.

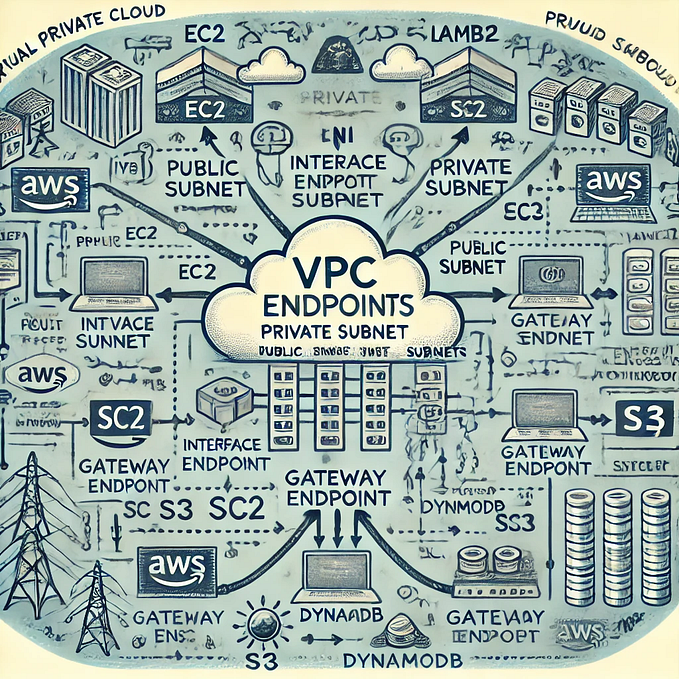
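The RSS queue selection can be sketched as a hash over the flow's 4-tuple followed by a lookup in an indirection table. Several things here are assumptions made for illustration: `zlib.crc32` is a stand-in for whatever hash function the device is configured to use, the 128-entry table and 8 queues are arbitrary, and the function name `rss_queue` is made up.

```python
import ipaddress
import struct
import zlib


def rss_queue(src_ip, dst_ip, src_port, dst_port, indirection_table):
    """Pick an RX queue for a flow from a hash of its 4-tuple."""
    key = (ipaddress.ip_address(src_ip).packed
           + ipaddress.ip_address(dst_ip).packed
           + struct.pack("!HH", src_port, dst_port))
    flow_hash = zlib.crc32(key)  # stand-in hash, deterministic per flow
    # The hash selects a table entry, and the entry names the queue.
    return indirection_table[flow_hash % len(indirection_table)]


num_queues = 8
table = [i % num_queues for i in range(128)]  # spread entries round-robin

# 1000 TCP flows from one client address, differing only in source port.
flows = [("10.0.0.5", "10.0.1.9", 40000 + i, 443) for i in range(1000)]
per_queue = [0] * num_queues
for flow in flows:
    per_queue[rss_queue(*flow, table)] += 1
print(per_queue)
```

Because the hash depends only on the tuple, every packet of a given flow lands on the same queue, which preserves in-order delivery within the flow. The flip side is that a single heavy flow cannot be spread across cores.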

5. Single Root I/O Virtualization (SR-IOV)

- SR-IOV allows AWS to create so-called Virtual Functions (VFs) from a single physical NIC and assign these VFs directly to EC2 instances.

- The ENA driver in the EC2 instance interacts directly with these VFs, providing direct access to the network hardware.

- This setup bypasses the hypervisor for most network processing, leading to lower latency, higher throughput, and reduced CPU overhead.

- The combined use of SR-IOV and ENA is especially beneficial for high-performance and network-sensitive workloads, ensuring consistent and reliable network performance in the AWS cloud environment.

- It is important to note that while ENA uses SR-IOV to provide direct access to the underlying NIC hardware, some interaction with the hypervisor still occurs, especially for control plane operations (e.g., configuring network settings, virtual NIC creation). For data plane operations (sending and receiving packets), however, the hypervisor is largely bypassed, which helps achieve low-latency and high-performance networking.

6. Link Aggregation and Failover

- ENA also supports failover mechanisms to ensure high availability. If the underlying physical interface on the AWS side fails, the ENA driver can reroute traffic to another physical interface with minimal impact on connectivity.

- This capability ensures that applications remain available even in the event of hardware failures in the underlying infrastructure.

7. Monitoring and Management

ethtool and other Linux network utilities can be used to manage and monitor the ENA driver on EC2 instances:

- ethtool -i <interface>: View driver version and details.

- ethtool -g <interface>: View the RX/TX ring buffer sizes (ethtool -G <interface> modifies them).

- ethtool -k <interface>: Display offload capabilities (TSO, LRO, checksum offload, etc.).

- ifconfig or ip a: Display interface details and statistics.

AWS also offers CloudWatch metrics for monitoring network performance (e.g., throughput, packet drops), which can be combined with instance-level statistics for a complete picture of network health.
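For instance-level statistics, the Linux kernel also exposes basic per-interface counters as files under `/sys/class/net/<interface>/statistics/`. The sketch below reads a few of them. The function name `read_counters` and the `sysfs_root` parameter (there mainly to make the function testable) are choices made for this example, and the set of counters shown is only a sample.

```python
from pathlib import Path


def read_counters(interface,
                  names=("rx_packets", "tx_packets", "rx_dropped", "tx_dropped"),
                  sysfs_root="/sys/class/net"):
    """Read selected per-interface counters exposed by the Linux kernel."""
    stats_dir = Path(sysfs_root) / interface / "statistics"
    counters = {}
    for name in names:
        counter_file = stats_dir / name
        if counter_file.exists():  # skip counters this system does not expose
            counters[name] = int(counter_file.read_text().strip())
    return counters


if __name__ == "__main__":
    # "lo" exists on practically every Linux host; on EC2, substitute the
    # ENA-backed interface name reported by `ip a`.
    print(read_counters("lo"))
```

Sampling these counters at a fixed interval and taking the difference gives packets per second and drops per second, which can then be lined up against the CloudWatch figures.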

Use Cases for ENA

Big Data Applications: Applications that require fast data transfers between nodes, such as Hadoop, Apache Spark, or machine learning workloads.

Network-Intensive Applications: Use cases like content delivery networks (CDNs), media streaming, and high-frequency trading, which require low-latency, high-throughput networking.

Distributed Databases: Large distributed systems like Cassandra, MongoDB, or MySQL clusters, where high network performance and low-latency communications between nodes are critical.

Virtual Network Appliances: Virtual firewalls, load balancers, or VPNs can benefit from ENA’s ability to handle millions of packets per second with minimal latency.

Comparison to Other Drivers

- ENA vs. Intel ixgbe/ixgbevf: The Intel ixgbe and ixgbevf drivers are commonly used for Intel-based NICs in AWS. Nevertheless, ENA is purpose-built for AWS cloud environments and offers better scalability, lower latency, and higher throughput on supported instance types.

- ENA vs. DPDK: ENA operates at the kernel level, while DPDK (Data Plane Development Kit) is a user-space framework for fast packet processing. DPDK can be used with ENA network interfaces by using a Poll Mode Driver (PMD) that interacts with the ENA hardware.
